Maitreya Patel

Ph.D. Student, School of Computing & AI, Arizona State University.

I am a Ph.D. candidate at Arizona State University (ASU). I am working alongside Yezhou Yang and Chitta Baral.

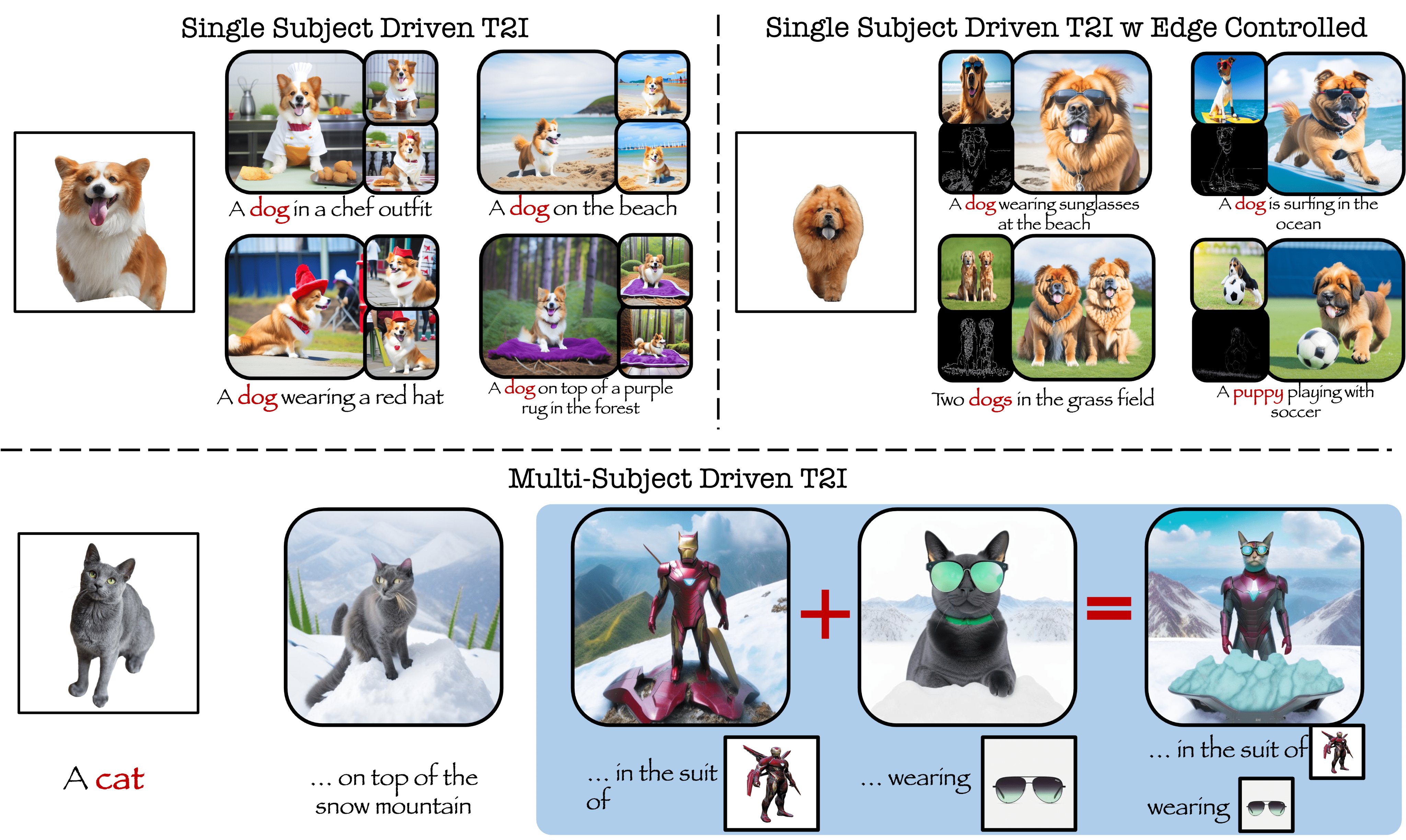

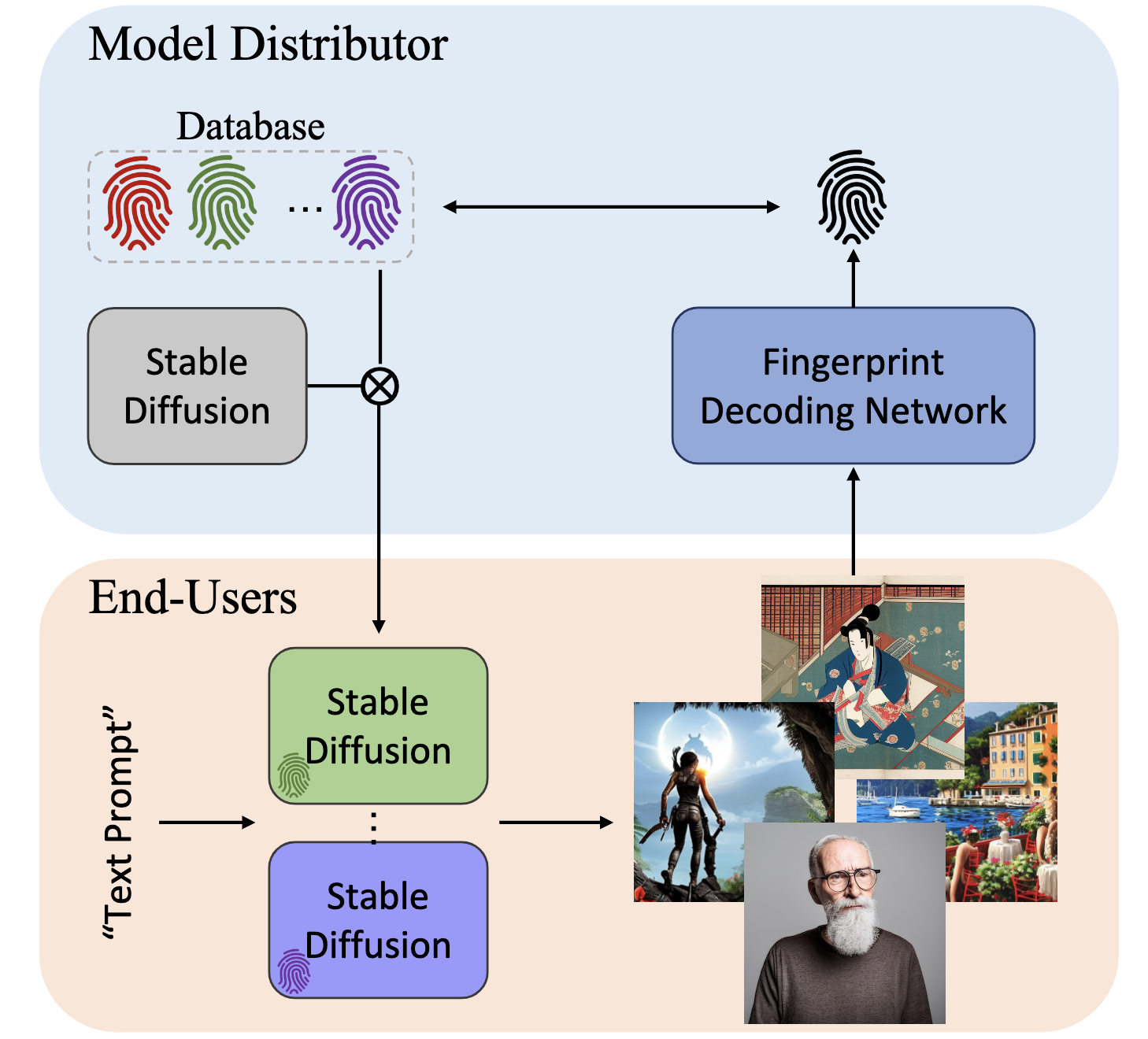

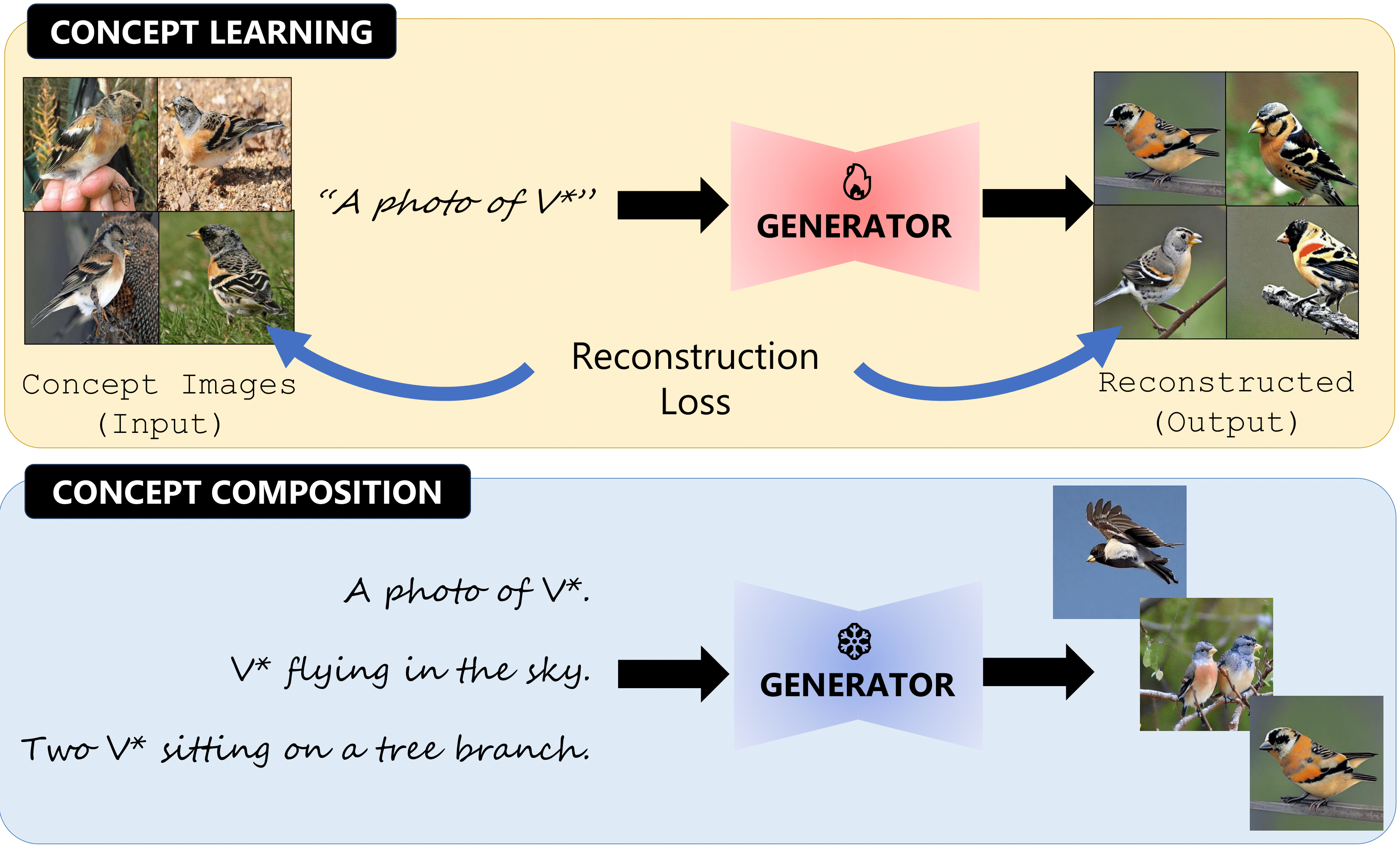

My research focuses on the theoretical foundations of visual generative models and their applications in conditional sampling, including image/video editing, inverse problems, and personalization. I am also interested in representation learning, large-scale multimodal foundation models, and inference-time steering to enhance the controllability and reliability of generative models. I believe true World Models must be generalizable, efficient, controllable, responsible, and grounded in physical laws.

🚀 🚀 Alongside my research, I am writing The Stochastic Journey — a blog series that delves into the mathematical foundations of generative models, tracing their roots in stochastic calculus, probability theory, and differential equations.

I have extensive experience developing large-scale text-to-image diffusion/flow models and unified multimodal models, working across the full development lifecycle, including mathematical foundations, model architecture design, pre-training, and RL alignment. I focus both on advancing the fundamental understanding of diffusion and multimodal models and on building end-to-end systems that push the boundaries of what’s possible with them.

Note: I am currently not taking on any new students for supervision. However, if you have a well-defined research proposal and can clearly articulate how we might collaborate, I welcome you to reach out.

News

| Date | Update |
|---|---|
| Sep 18, 2025 | EraseFlow accepted at NeurIPS’25 as a Spotlight. |
| Jul 25, 2025 | 🚀🚀 FlowChef and RefEdit were accepted at ICCV 2025! We’ll also host a tutorial. See you in Hawaii! |
| Jun 5, 2025 | 📝 Released RefEdit, a referring-expression-based image editing framework. Check out our paper and page! ✨ |
| May 19, 2025 | 🎨 Joined the Adobe Firefly team as a Research Intern, exploring some cool stuff in generative models. 🚀 |
| Mar 11, 2025 | 🖼️ Joined SonyAI (Vision Foundation Model and Generative AI) as a Research Intern, working on multimodal generative models. |

Selected Publications

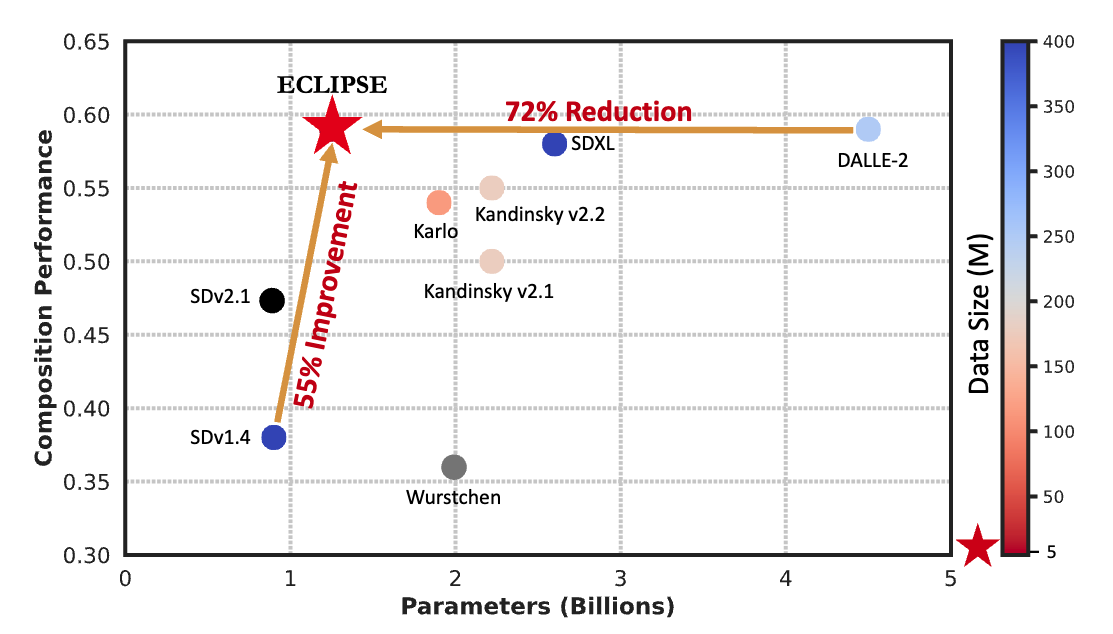

ECLIPSE: A Resource-Efficient Text-to-Image Prior for Image Generations

In CVPR – 2024
